Web scraping, the process of programmatically extracting data from websites, is an invaluable tool across industries for competitive analysis, price aggregation, research, and more. However, it poses unique challenges as websites implement sophisticated anti-scraping technologies to protect their content, user data, and system resources. This article explores common anti-scraping measures deployed by websites, the strategies that can circumvent them, and the challenges faced by scrapers as these defenses evolve.

Anti-Scraping Measures: Techniques and Technologies

Websites employ a variety of techniques to detect and prevent unauthorized scraping. These methods can broadly be categorized into detection mechanisms (passive monitoring and analysis) and active countermeasures (defensive actions to mitigate potential scraping).

1. Challenge-Based Anti-Scraping Techniques

CAPTCHAs

CAPTCHAs (Completely Automated Public Turing Test to Tell Computers and Humans Apart) are one of the most widely used tools to thwart bots. These challenge-response tests ensure that only human users can proceed by requiring tasks such as:

- Identifying text from an image

- Checking the “I’m not a robot” box

- Solving puzzles, completing sliders, or detecting objects in images

Recent Advances:

Modern versions, such as invisible CAPTCHAs (e.g., reCAPTCHA v3), analyze user behavior across sessions to assign risk scores without issuing interactive challenges.

Bypass Strategies:

CAPTCHA-solving services and machine learning algorithms can achieve varying levels of success, but continual CAPTCHA evolution heightens the arms race between bots and defenses.

Challenge-Response Authentication

This method extends beyond CAPTCHAs by asking users to solve diverse puzzles, perform simple math, or interact with non-standard UI elements to verify human presence.

Honeypot Traps

Web pages sometimes include invisible or hidden elements (e.g., text boxes or links) that are intentionally placed to detect bots. While these elements are ignored by human users, bots may inadvertently interact with them, leading to immediate blocks.

Defensive Tactic to Avoid Interaction:

Advanced scrapers can identify these traps by analyzing the DOM (Document Object Model) for unusual or hidden elements and ensuring no interaction.

2. Request-Based Defenses

Rate Limiting

Websites often restrict the number of requests a user can make within a specific timeframe. Exceeding this limit triggers blocks or throttles responses.

Bypass Strategies:

- Employ adaptive throttling to dynamically adjust request frequency.

- Include randomized delays between interactions to mimic natural pacing.

IP Blocking

Suspicious IP addresses—either from data centers, proxies, or exhibiting abnormal traffic patterns—may be flagged and blocked by websites.

Advanced Blocking Methods:

- ASN (Autonomous System Number) Blocking : Blocks entire data center networks.

- Geo-Fencing : Restricts traffic based on geographic regions associated with high bot activity.

Bypass Strategies: To avoid detection:

- Rotate IP addresses and geo-locations using datacenter proxies , residential proxies , or mobile proxies .

- Prioritize rotating proxies associated with residential IP pools to better mimic legitimate users.

IP Session Consistency

Some websites assign session-specific tokens to user devices, associating them with the originating IP address. If subsequent requests lack the token or originate from a different IP address, they may be flagged as suspicious.

Bypass Strategies:

Maintain session consistency by persisting tokens across requests while using the same IP address.

Interval Analysis

Websites may track the frequency of visits and analyze long-term patterns, looking for regular intervals indicative of automated scraping (e.g., cron jobs).

Countermeasures:

Randomize request timing over both short-term and long-term intervals to mirror unpredictable human behavior.

Behavioral Analysis

Websites monitor user interactions to detect bot-like patterns. Abnormal behaviors include:

- Rapid page navigation

- Lack of scrolling, mouse movement, or clicks

- Perfectly regular request timings

Bypass Strategies:

- Throttle requests to avoid rapid navigation.

- Simulate human-like interactions, including scrolling, mouse movement, and keyboard input.

- Use advanced tools, such as Puppeteer or Playwright, to mimic authentic browser behavior.

3. Identification Tactics

User-Agent Detection

Anti-scraping systems analyze the user-agent string in HTTP headers to detect non-human traffic.

Bypass Strategies:

- Rotate user-agent strings for every request to mimic a variety of browsers and devices.

- Ensure alignment with other headers like Accept-Language , DNT (Do Not Track) , and Referer to avoid inconsistencies.

Fingerprinting

Websites collect detailed data about visiting devices to create unique user profiles. Attributes include:

- Browser and device types

- Screen resolution

- Time zone

- Installed fonts, extensions, and plugins

- TLS (Transport Layer Security) and WebGL configuration

Technological Context:

Advanced anti-scraping solutions like PerimeterX or Datadome leverage multi-layer fingerprinting that incorporates audio, canvas, and WebGL rendering differences.

Bypass Strategies:

- Spoof key attributes of device fingerprints (e.g., browser headers, OS, plugins).

- Use anti-detection frameworks, such as Multilogin , to simulate legitimate browsing environments.

4. Resource-Intensive Barriers

JavaScript Challenges

Platforms such as Cloudflare’s firewall issue JavaScript-based tasks—complicated challenges that only real web browsers can execute.

Bypass Strategies:

Headless browsers like Puppeteer or Playwright can execute JavaScript dynamically, emulating real users convincingly.

Regular Structure Changes

Websites frequently alter HTML and CSS structures to disrupt scraping workflows, particularly by randomly renaming DOM elements or changing data nesting patterns.

Bypass Strategies:

- Rely less on fixed HTML selectors; focus on scraping data embedded in APIs or JavaScript objects.

- Use machine learning models to dynamically adapt to structural changes.

URL Analysis

Websites can track the types of pages a user accesses. Repeated visits to data-rich pages (e.g., product or pricing pages) may trigger suspicion.

Bypass Strategies:

Randomize page navigation patterns and ensure proportional visits to non-critical pages.

5. Other Challenges

JavaScript Rendering

Modern web applications using frameworks like React, Angular, or Vue.js often generate content dynamically, requiring scrapers to execute JavaScript to fully render the data.

Mitigation:

Deploy headless browsers or tools like Selenium to render JavaScript, or consider capturing static pre-rendered content where available.

Infinite Scroll & Lazy Loading

Many websites load content dynamically as users scroll down a page or interact with specific elements.

Bypass Strategies:

Automate scrolling using headless browsers, or identify and call the underlying APIs powering data loads.

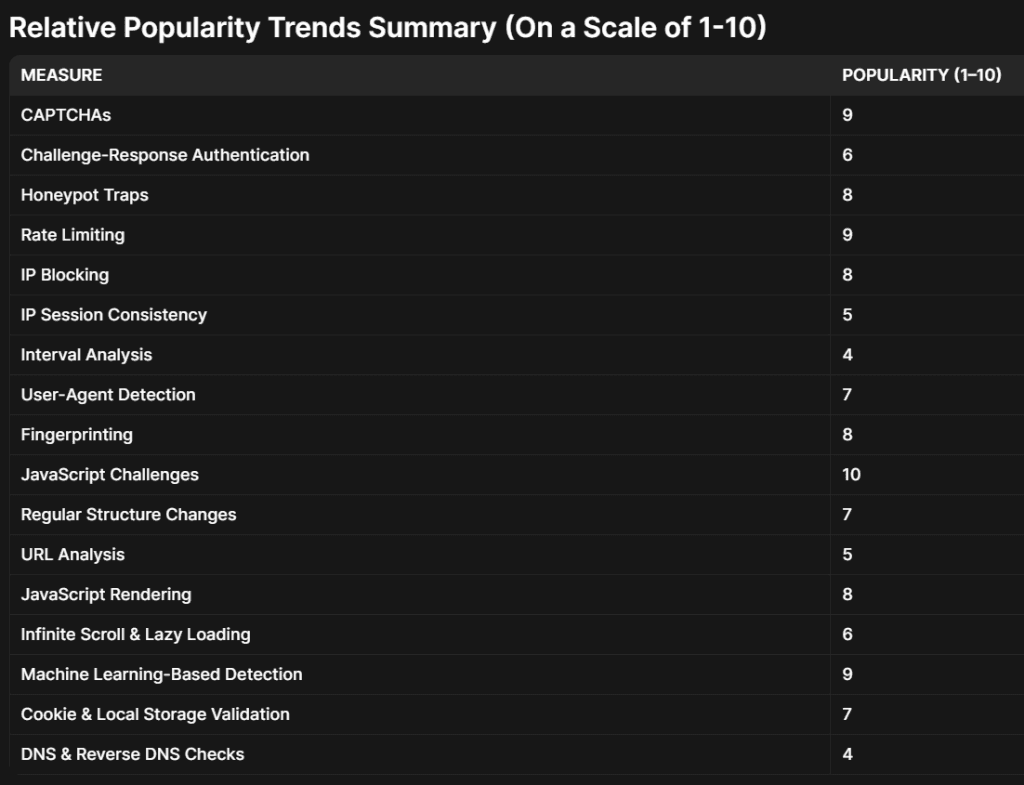

These scores are based on observed trends in anti-scraping implementations, industry reports, and security practices as of 2023 . They serve as a qualitative estimate rather than definitive data. Each website may deploy a combination of these measures, tailored to their risk thresholds, technical infrastructure, and the value of their data, so the actual popularity may vary by context and industry.

Industry Statistics

Volume of Bot Traffic

- Stat: Bots accounted for 47.4% of all internet traffic in 2022 , split between good bots (14.6%) and bad bots (30.2%).

- Context: This highlights the scale of automated activity on the web and the critical need for anti-scraping technologies. Organizations deal with both helpful bots (e.g., search engine crawlers) and harmful ones (e.g., scrapers, spammers).

Bad Bot Sophistication Levels

- Stat: 51.2% of bad bots detected in 2022 were classified as advanced persistent bots , which can mimic human behavior, circumvent CAPTCHAs, and rotate IPs.

- Context: This demonstrates how scrapers and malicious bots are increasingly leveraging sophisticated methods like browser emulation, headless browsers, and machine learning, making anti-scraping more complex.

Industry-Specific Targeting

- Stat: E-commerce (22.1%), travel and airlines (20.9%), and financial services (14.6%) are the most targeted industries by malicious bots.

- Context: These industries are data-rich and highly vulnerable to scraping attacks due to pricing information, inventory availability, or sensitive customer data. Mention this to illustrate how anti-scraping measures are particularly critical in certain sectors.

Prevalence of CAPTCHA Use

- Stat: Over 50% of websites globally use CAPTCHAs or variations like reCAPTCHA as an anti-bot measure. Google’s reCAPTCHA alone has been integrated on 4 million websites worldwide.

- Source: Google reCAPTCHA insights (widely cited in security blogs).

- Context: This highlights CAPTCHAs’ role as one of the most easily accessible and widely adopted defenses against automated activity.

Cost of Bot Activity

- Stat: The economic cost of malicious bot activity, including scraping, account takeovers, and content theft, is estimated to exceed $10 billion annually .

- Source: Various industry reports, including Forrester Research , Imperva insights, and third-party analysts.

- Context: This showcases the financial impact of malicious bots, motivating companies to invest in anti-scraping solutions.

Cloudflare’s Market Penetration

- Stat: Cloudflare provides bot management services for over 20% of the world’s top websites based on Alexa rankings.

- Source: Cloudflare’s quarterly and annual reports.

- Context: Illustrating the dominance of anti-bot tools provided by security-as-a-service providers like Cloudflare creates a strong sense of how large-scale websites approach these challenges.

API Abuse and Scraping

- Stat: API abuse accounts for 40% of all data breaches and is a growing avenue for data scraping.

- Source: Salt Security’s State of API Security Report (2023)

- Context: Scrapers have shifted to APIs as an alternative to direct HTML parsing due to the cleaner and structured nature of APIs, but this also puts them in the spotlight for anti-scraping strategies.

Proxy Service Usage

- Stat: Over 75% of web scrapers use proxies (residential, data center, or mobile) to evade detection. The residential proxy market alone is estimated to be worth $1.4 billion by 2026 .

- Source: Research by MarketWatch and proxy service providers like Bright Data.

- Context: The statistics underline the growing sophistication of scraper strategies and how the proxy industry directly supports scraping activities.

JavaScript Frameworks and SPAs (Single Page Applications)

- Stat: By 2022, 29.8% of the top 1 million websites used JavaScript frameworks like React, Angular, or Vue.js, which render content dynamically.

- Source: W3Techs.com

- Context: JavaScript-heavy websites pose increased challenges for scrapers, requiring advanced tools to execute client-side processing. Mentioning the rise of SPAs emphasizes the growing reliance on resource-intensive barriers like headless browsers.

Infinite Scroll Adoption

- Stat: More than 60% of content-heavy websites (e.g., blogs, media sites, social networks) use infinite scrolling or lazy loading to present data gradually.

- Source: Developer surveys (e.g., Stack Overflow trends, general UX/UI design reports).

- Context: This trend complicates traditional scraping methods as more websites prioritize optimizing user experience over static content delivery.

Demand for Web Data

- Stat: 50%-60% of businesses rely on web scraping for competitor analysis, lead generation, pricing intelligence, or sentiment analysis.

- Source: Findings from reports by Deloitte, Gartner, and scraping-related surveys.

- Context: Despite legal and ethical concerns, the demand for extracted web data continues to grow, creating tension between data consumers and website owners.

Regulatory Trends

- Stat: Over 30% of web scraping cases brought to court revolve around violations of Terms of Service (ToS) or intellectual property law. Significant rulings, such as HiQ Labs vs. LinkedIn (9th Circuit, 2019) , often decide the legality of scraping on a case-by-case basis.

- Source: Legal databases and analysis by organizations like the Electronic Frontier Foundation (EFF).

- Context: The increasing regulatory oversight of scraping activities raises the stakes for businesses deploying scrapers while encouraging better defenses on the part of websites.

Global Top Vendors for Anti-Scraping

- Stat: Among anti-scraping and bot mitigation vendors, tools like Cloudflare, Imperva, PerimeterX, Botify, and Datadome dominate the enterprise market, powering websites with real-time bot-blocking technologies.

- Source: Industry research reports from vendors and analysts.

- Context: This demonstrates the availability and sophistication of purpose-built anti-scraping technologies.

Cost of Anti-Bot Services

- Stat: Enterprises investing in bot management spend between $5,000-$50,000 per month for solutions depending on the traffic volume, complexity, and industry-specific risks.

- Source: Pricing details and customer reviews from anti-bot solution vendors like PerimeterX, Cloudflare, and Imperva.

- Context: Highlighting the financial commitment associated with robust anti-scraping defenses underscores how serious the issue is for high-value websites.

Conclusion

As websites deploy increasingly sophisticated anti-scraping defenses, the challenges for web scrapers continue to grow. Tools like residential proxies, headless browsers, and dynamic fingerprint spoofing play a vital role in staying ahead of detection mechanisms, but the arms race is far from over. Future trends in anti-scraping technologies, such as AI-powered detection and server-side rendering, promise to push both defenders and attackers to new levels of innovation.

By understanding the key anti-scraping techniques and their counter-strategies, organizations can strike a balance between ethical scraping practices and respecting website policies, fostering responsible use of web data across the digital landscape